To put it simply, containers are a form of operating system virtualization.

You can use a single container to run anything from a small microservice to a larger application. Inside the container, you have all the necessary executables, binary code, libraries, and configuration files.

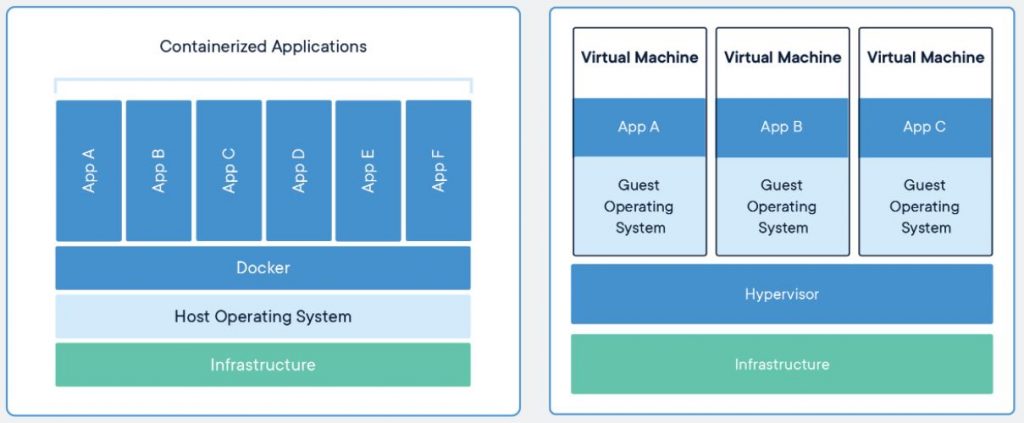

But comparing containers to virtual machines or servers is not quite right, since containers do not contain operating system images. What do you get? Something lightweight and portable, with less overhead.

Even though I won’t touch on clusters in this article, it’s worth mentioning that in larger deployments you can run multiple containers as one or more container clusters, managed by a container orchestrator (Kubernetes is a great example).

What is a container?

A container is probably the best solution to date when you need to get software to run reliably when moved from one computing environment to another.

From your developer’s laptop to a staging site, or even into production.

And we’re not just talking about software versions (for example, you developed something on PHP 7.4 and your production server runs PHP 5.6). We’re also talking about differences in network topology, security policies, and storage. A lot of things can go wrong when moving stuff around. A lot!

Ok, but how does a container solve those problems?

By packaging the entire runtime environment into one unit, a container abstracts away differences in OS distributions, other software installed on the machine, and the underlying infrastructure. Quite awesome, wouldn’t you agree?
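To make that concrete with the PHP example above, here is a minimal sketch, assuming Docker is installed and you use the official `php` image from Docker Hub. The helper name `php_run_command` and the `index.php` script are hypothetical; the helper just builds the `docker run` command that runs a script with PHP 7.4, whatever PHP version (if any) the host itself has.

```python
import os
import shlex

# Assumption: the project was developed against PHP 7.4, so we pin the official
# "php" image tag for that version instead of relying on the host's PHP.
PHP_IMAGE = "php:7.4-cli"


def php_run_command(project_dir: str, script: str, image: str = PHP_IMAGE) -> list[str]:
    """Build a `docker run` argv that runs `script` with the PHP inside `image`."""
    host_dir = os.path.abspath(project_dir)  # bind mounts expect an absolute host path
    return [
        "docker", "run",
        "--rm",                    # remove the container once the script exits
        "-v", f"{host_dir}:/app",  # mount the project directory into the container
        "-w", "/app",              # start in the mounted directory
        image,
        "php", script,             # the php binary comes from the image, not the host
    ]


if __name__ == "__main__":
    # Hypothetical script name; this prints the command rather than executing it.
    print(shlex.join(php_run_command(".", "index.php")))
```

On a machine with Docker you could hand the list to `subprocess.run(cmd, check=True)`. Because the PHP version lives in the image tag rather than on the host, the same command should behave the same on a laptop, a staging site, or production.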

What is Docker?

Docker is a software platform for building applications based on containers.

While containers as a concept are nothing new, Docker has helped popularize the technology since 2013. Docker put more eyes on containerization and microservices in software development, which today we would call cloud-native development.

Docker is an open-source project and open platform, which in my opinion helped a lot. And while it was originally built for Linux, today you can run it on Windows and even macOS.

Is a container a virtual machine?

In short, no.

People tend to confuse container technology with virtual machines (VMs) or even server virtualization technology. Although there are some basic similarities, containers are a different kind of animal.

The idea that a process can be isolated, to some degree, from the rest of its OS has been built into Unix operating systems such as BSD (jails) and Solaris (zones) for a while now.

The original Linux container technology, LXC, is an OS-level virtualization method for running multiple isolated Linux systems on a single host.

Containers decouple your application from the operating system, which means that you can maintain a clean, minimal Linux OS and run everything else in one or more isolated containers. And because the OS is abstracted away from your containers, you can move them to any Linux server that supports the container runtime.
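Under the hood, Linux containers (LXC, and Docker as well) get much of this isolation from kernel namespaces, combined with cgroups for resource limits. As a rough illustration, assuming a Linux host with `/proc` mounted, this Python sketch lists the namespaces the current process belongs to. The helper names `parse_ns_link` and `namespaces_of` are my own, not part of any library.

```python
import os
import re

# Linux namespace types; container runtimes combine several of these
# (plus cgroups) to isolate a container from the rest of the host.
NAMESPACES = ("mnt", "pid", "net", "uts", "ipc", "user")

# A /proc/<pid>/ns/<name> symlink points at a target like "pid:[4026531836]".
_LINK = re.compile(r"^(\w+):\[(\d+)\]$")


def parse_ns_link(target: str) -> tuple[str, int]:
    """Split a namespace link target into (namespace type, inode number)."""
    match = _LINK.match(target)
    if match is None:
        raise ValueError(f"unexpected namespace link: {target!r}")
    return match.group(1), int(match.group(2))


def namespaces_of(pid: str = "self") -> dict[str, int]:
    """Return {namespace type: inode} for a process; {} where /proc is unavailable."""
    result = {}
    for name in NAMESPACES:
        try:
            target = os.readlink(f"/proc/{pid}/ns/{name}")
        except OSError:  # not Linux, no permission, or kernel lacks this namespace
            continue
        kind, inode = parse_ns_link(target)
        result[kind] = inode
    return result


if __name__ == "__main__":
    for kind, inode in namespaces_of().items():
        print(f"{kind:5} {inode}")
```

Two processes share a namespace when they report the same inode number. If you run the script once on the host and once inside a container, the `pid`, `mnt` and `net` numbers will typically differ, which is exactly the isolation described above, while the kernel itself is still shared.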

Here’s a helpful image to visualize why a container is not a virtual machine:

Whether VMs or containers are better for your use case, or which technology better fits your end goals, is not the subject of this article. We’ll talk about this soon in another article.

What are the benefits of containers?

Below you’ll find just some of the benefits of using containers:

- Increased portability – once you dive in, deploying a container to multiple hardware platforms is amazingly fast and easy.

- Less overhead – as a container does not include the OS image, it requires fewer resources.

- Consistency – this is a big one! A DevOps engineer can rest assured knowing the container will run the same way, regardless of where it is deployed.

- Efficiency and better development – containers allow applications to be rapidly deployed, patched, and even scaled. There’s a lot of flexibility involved.

Container use cases

Giving an example for every scenario would take a while, but I’ll outline some commonly seen use cases of containers.

Easier deployment of repetitive jobs and tasks

I’m sure there are people doing far more complex things than what I do with containers.

But a fairly easy-to-understand example is deploying, in one click, a new WordPress installation with some of my own customizations and certain plugins preinstalled (one container for the files and one for the database). So when I need to test something, or create a new project for a client and get to work, I don’t have to manually do everything it takes to reach the point of actual work in WordPress, or on a plugin/theme.

Oh, and I can create as many clones of this ‘template’ as I need. It doesn’t sound like a big deal until you take on new clients often or need to test something without breaking anything. Or heck, you just want to experiment with something really fast.
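Here is a minimal sketch of that two-container setup, assuming the official `wordpress` and `mariadb` images from Docker Hub. It is not my actual template: the `WordPressTemplate` class, the naming scheme, the placeholder password and the port numbers are all hypothetical, and your own customizations and preinstalled plugins would live in a custom image that you pass in as `wp_image`. Instead of a reverse proxy, each clone simply publishes its own host port.

```python
import shlex
from dataclasses import dataclass


@dataclass
class WordPressTemplate:
    """Hypothetical settings for one disposable WordPress site (files + database)."""

    project: str                    # prefix for every container, volume and network name
    http_port: int                  # host port forwarded to WordPress's port 80
    db_password: str = "change-me"  # placeholder only; use a real secret outside testing
    wp_image: str = "wordpress"     # swap in your own image with plugins preinstalled
    db_image: str = "mariadb"

    def commands(self) -> list[list[str]]:
        """Return the docker CLI invocations that create this clone, in order."""
        net, db, wp = f"{self.project}-net", f"{self.project}-db", f"{self.project}-wp"
        return [
            # Private network so WordPress can reach the database by container name.
            ["docker", "network", "create", net],
            # Container 1: the database, with its data kept in a named volume.
            ["docker", "run", "-d", "--name", db, "--network", net,
             "-e", "MARIADB_DATABASE=wordpress",
             "-e", "MARIADB_USER=wordpress",
             "-e", f"MARIADB_PASSWORD={self.db_password}",
             "-e", "MARIADB_RANDOM_ROOT_PASSWORD=yes",
             "-v", f"{db}-data:/var/lib/mysql",
             self.db_image],
            # Container 2: WordPress itself, with its files kept in a second volume.
            ["docker", "run", "-d", "--name", wp, "--network", net,
             "-p", f"{self.http_port}:80",
             "-e", f"WORDPRESS_DB_HOST={db}",
             "-e", "WORDPRESS_DB_NAME=wordpress",
             "-e", "WORDPRESS_DB_USER=wordpress",
             "-e", f"WORDPRESS_DB_PASSWORD={self.db_password}",
             "-v", f"{wp}-files:/var/www/html",
             self.wp_image],
        ]


if __name__ == "__main__":
    # Two independent clones of the same template, each on its own port.
    for name, port in (("client-a", 8081), ("client-b", 8082)):
        for cmd in WordPressTemplate(name, port).commands():
            print(shlex.join(cmd))
```

Because every container, volume and network name is derived from the project name, two clones never collide, so spinning up another copy is just a matter of picking a new name and port.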

Note: the ‘one click’ in my example involves more than just Docker. It also uses a reverse proxy and some specific apps that make working with the whole thing easier, especially if you need HTTPS and other extras. We’ll talk about that in another article, and I’ll be sure to link it here once it’s done.

Conclusion – VMs or containers?

As usual, it depends. I will touch on this subject in a separate article, as it can get quite complicated, especially when going beyond a simple development environment or a home lab.

To end this article, I urge you to try Docker and the whole container thing if you haven’t already. I promise it’s not just smoke and mirrors or hype; it’s an amazing and efficient piece of software. There are a lot of scenarios that are perfect for containerization, and your productivity can improve greatly if you plan everything out well before creating your ‘perfect environment’.

So, if you were on the fence about containers… yes, learning as much as you can about them is useful. And yes, they can help in a lot of scenarios. Thanks for reading!